As automation and artificial intelligence reshape the workforce, a Nobel laureate suggests that future generations may enjoy more free time and fewer traditional jobs.

On a serene morning in Stockholm, a Nobel Prize-winning physicist observes a robotic arm pouring coffee with remarkable precision. This small act serves as a microcosm of a much larger transformation taking place in the world of work.

“Your grandchildren will probably work less than you,” he states calmly. “Maybe a lot less.”

While offices outside buzz with activity and deadlines loom, inside research labs and warehouses, machines are increasingly capable of performing tasks that once required human intellect. From drafting emails and analyzing contracts to diagnosing illnesses and even generating software code, the capabilities of automation are expanding rapidly.

The pressing question many individuals find themselves pondering is no longer a matter of science fiction: If machines can do my job, what happens to me?

A Structural Shift, Not Just Another Tech Cycle

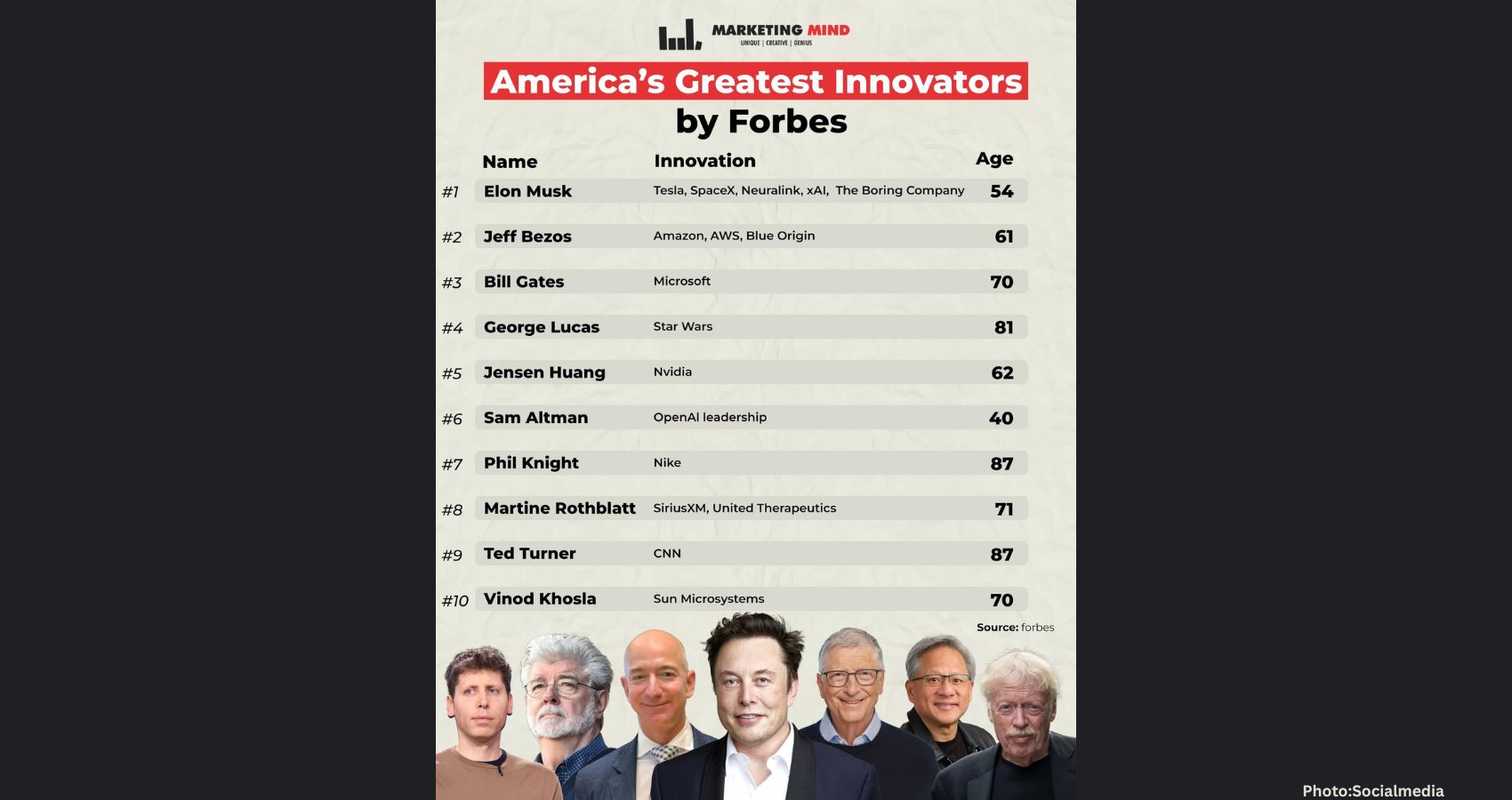

When Nobel laureates align their views with influential figures like Elon Musk and Bill Gates, it captures public attention. Several esteemed scientists, including theoretical physicist Giorgio Parisi, contend that the rise of artificial intelligence and robotics signifies a shift akin to the Industrial Revolution rather than merely an evolution of technology.

Musk envisions a future characterized by “universal high income,” where work becomes optional. Gates similarly foresees AI systems generating “a lot of free time” by managing mundane tasks.

According to these Nobel physicists, productivity is set to soar, human labor hours will diminish, and the conventional notion of a lifelong job may not endure through the century. The trajectory they suggest points toward a future with significantly less compulsory work.

Automation Is Already Here

The evidence of this shift is plain and requires no telescope to observe. Modern warehouses operate with fleets of autonomous robots, while call centers utilize AI agents to manage thousands of conversations simultaneously. Hospitals are deploying algorithms to analyze scans and identify anomalies.

Historically, automation has eliminated certain jobs while creating new ones; farmers transitioned to factory workers, and factory workers evolved into office employees. However, this time, the landscape may be different.

AI is not limited to replacing physical labor; it also takes on cognitive tasks. It can draft reports, design systems, optimize logistics, and even write self-improving code. Consequently, the economy may maintain or even increase productivity with fewer full-time workers, leading to a society that is richer in productivity but potentially poorer in traditional employment opportunities.

The Paradox of Abundance

Theoretically, this shift should yield greater prosperity. If machines can produce more with less human labor, everyone stands to benefit. Yet wages remain tethered to hours worked, so fewer hours could mean less income unless the gains are distributed differently. Musk refers to this era as the “age of abundance,” while economists explore models for guaranteed income or taxation of AI-driven capital.

The more profound question, however, is psychological: What occurs when work ceases to be the organizing principle of daily life?

The Hidden Risk: Emptiness

Jobs, even those that are less than ideal, provide a structure to our lives—waking up, commuting, completing tasks, taking breaks, and experiencing small victories. Removing this structure can lead to a sense of disorientation.

The potential danger of a world with fewer jobs is not laziness but rather a sense of meaninglessness. Without intentional design, free time may devolve into passive consumption—endless scrolling, distractions, and algorithm-driven habits.

A Nobel laureate recently articulated this concern: “I’m not afraid of machines working. I’m afraid of humans forgetting what to do when they are not working.”

How to Prepare for a Low-Work Future

If automation continues on its current trajectory, preparation may shift from planning a traditional career path to building resilience. Discussions among technologists, economists, and scientists often highlight three key themes:

First, individuals should cultivate skills driven by curiosity rather than solely for employment. Interests such as art, language, gardening, programming, and music can endure beyond the fluctuations of job markets.

Second, prioritizing financial stability over status can provide flexibility in a world characterized by shifting roles and shorter contracts.

Lastly, strengthening community ties becomes essential as traditional work structures weaken. Those who thrive may not be the busiest individuals today but rather those who have learned to navigate life without constant direction.

A Future That Feels Like a Long Sunday

Imagine a weekday that resembles a leisurely Sunday afternoon. Your AI assistant has efficiently sorted your inbox, autonomous vehicles glide silently outside, and grocery stores operate largely through automation.

You may still work, but perhaps only 10 to 15 focused hours per week, engaging in distinctly human activities such as creativity, empathy, negotiation, and invention. Income might derive from state support or productivity-sharing mechanisms, supplemented by flexible, chosen contributions.

This future will not arrive abruptly; rather, it will gradually unfold—one automated system at a time.

A Civilizational Crossroads

For centuries, technological advancements have reduced the need for physical labor. Electricity, machinery, and computing have consistently shortened work hours. We may now be approaching a pivotal moment where compulsory labor declines significantly.

The central challenge is no longer merely about how we earn a living but rather how we derive meaning when work is no longer the core of our identity. The traditional 40-year, full-time career may prove to be a fleeting historical phase.

The next phase prompts a deeper inquiry: If work becomes optional, what will give life its purpose?

As experts continue to analyze these shifts, the implications for society remain profound.

Will AI eliminate most jobs? Many routine tasks are already automated, and experts suggest that total human working hours may decline significantly.

Will individuals personally lose their jobs? It is more likely that unstable, contract-based, or part-time work will replace lifelong employment.

Which jobs are more resilient? Roles requiring complex human interaction, creativity, care, and physical presence tend to adapt more slowly to automation.

Ultimately, whether less work is beneficial depends on income policy, social structures, and how individuals choose to use their newfound free time. Managed effectively, it could enhance well-being; poorly managed, it could exacerbate inequality and social disconnection.

According to GlobalNetNews, these insights reflect the evolving landscape of work and the need for society to adapt to a future in which the nature of employment is fundamentally transformed.