Last month, reports emerged that major corporations including Walmart, Starbucks, Delta, and Chevron had begun using AI systems to monitor employee communications. The revelation sparked immediate concern among employees and workplace advocates about potential privacy infringements. However, experts say that while AI tools may introduce new efficiencies and raise ethical and legal dilemmas, monitoring employee conversations is not a new practice. David Johnson, a principal analyst at Forrester Research, notes, “Monitoring employee communications isn’t new, but the growing sophistication of the analysis that’s possible with ongoing advances in AI is.”

A recent study by Qualtrics revealed contrasting attitudes toward AI software in the workplace. Managers exhibit enthusiasm for its potential, whereas employees express apprehension, with 46% describing its use as “scary.” Johnson emphasizes the importance of trust, stating, “Trust is lost in buckets and gained back in drops, so missteps in applying the technology early will have a long tail of implications for employee trust over time.”

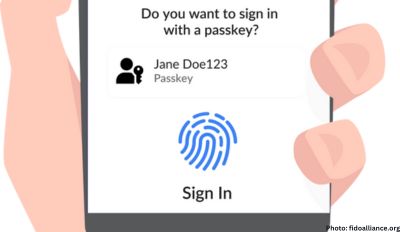

Aware, a startup established seven years ago, is integrating AI into common workplace platforms such as Slack, Zoom, Microsoft Teams, and Meta’s Workplace. The company works with businesses like Starbucks, Chevron, and Walmart, and its product aims to detect issues ranging from bullying and harassment to cyber threats and insider trading. According to Aware, data remains anonymous until the technology identifies instances requiring attention, at which point it alerts HR, IT, or legal departments for further action.

Companies like Chevron, Starbucks, Walmart, and Delta have disclosed that they use Aware’s technology for purposes such as monitoring public interactions on internal platforms, enhancing employee experiences, ensuring community safety, and tracking trends among employees. Other services, such as Proofpoint, use AI to monitor cyber risks and enforce company policies on AI tool usage, addressing concerns about data security.

Despite the potential benefits, the use of AI in the workplace raises concerns among employees about surveillance. Reece Hayden, a senior analyst at ABI Research, acknowledges the possibility of a “big brother effect” that could affect how candidly employees communicate on internal messaging services.

The use of AI for employee monitoring is a contemporary iteration of longstanding practices. Social media companies like Meta have employed similar techniques for content moderation, though they have faced criticism for inadequacies. Companies have also monitored employee behavior on work systems since the advent of email, in some cases extending to browser activity. What is new is that advanced AI tools integrated directly into employee workflows enable real-time analysis of vast datasets, providing insights into trends and discussions.

Hayden suggests that companies’ interest in monitoring employee conversations stems from a desire for real-time insights into workforce dynamics, which can inform internal strategies and policies. Nevertheless, Johnson emphasizes the importance of gaining and maintaining employee trust as AI technologies are implemented. Organizations must proceed cautiously to avoid eroding that trust through perceived surveillance and punitive actions based on AI-derived insights.

The deployment of AI for employee monitoring introduces unprecedented capabilities and challenges, and it requires a careful balance between operational efficiency and safeguarding employee privacy and trust.